The Dialogue System was originally developed for a voice-acted RPG, so support for voice acting (lip sync) is a core design feature. The Dialogue System doesn't analyze audio or generate lip sync animation itself; use a tool such as FaceFX, Reallusion's iClone, or Mixamo's FacePlus to generate the animation.

You can also use SALSA with RandomEyes to approximate lip sync. (See the SALSA with RandomEyes page for instructions.)

If you're using FaceFX's XML curves, use the FaceFX() command. You must first import the FaceFX third-party support package located in Third Party Support/FaceFX Support.unitypackage.

If you're using SALSA with RandomEyes, use the SALSA() command. You must first import the SALSA with RandomEyes third-party support package located in Third Party Support/SALSA with RandomEyes Support.unitypackage.

Mad Wonder's Cheshire Lip Sync generates Generic rig animations. You can add these to a layer (often a head layer) on your character's Animator Controller. Remember to set the weight of the layer to 1 so it takes effect.

Cheshire adds an animation event to the animation that plays its associated audio file. All you need to do is play the animation state, and the audio will be synchronized with it. To do this, use the AnimatorPlay() or AnimatorPlayWait() sequencer command. (AnimatorPlayWait() waits for the animation to finish.) For example, you could assign this to a dialogue entry's Sequence field:

```
AnimatorPlayWait(01northWindowModel)
```

Or, if you're using entrytags as described in Using entrytag To Simplify Sequences, you can set the Default Sequence to the following, which plays the entry's animation state and then always returns to the "IdleHead" state when done:

```
AnimatorPlayWait(entrytag)->Message(Done); AnimatorPlay(IdleHead)@Message(Done)
```

If you're using FacePlus, iClone, or another tool to generate FBX animations, how you use them in cutscene sequences depends on how you set up your avatar. Some developers set up a Humanoid Mecanim rig for the body and leave the face under Legacy animation control. In this case, use the Voice() sequencer command, which plays a legacy animation clip in conjunction with an audio (voiceover) file. Other developers put the face under Mecanim control, too, in which case you'll have an animation layer for the head. Simply use one of the many Mecanim sequencer commands, such as AnimatorPlay() or AnimatorTrigger(), to start the facial animation, and Audio() to start the voiceover audio.
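As a rough sketch of the two setups, assume an audio clip named `YesSir` at the top level of a Resources folder and a facial animation named `YesSir_Face`. Both names are hypothetical placeholders. Both examples rely on each command's default subject, the speaker. For a face under legacy animation control, the Sequence field might contain:

```
Voice(YesSir, YesSir_Face)
```

For a face on a Mecanim head layer, where `YesSir_Face` is assumed to be a state name in the head layer of the Animator Controller, the Sequence field might instead contain:

```
AnimatorPlay(YesSir_Face); AudioWait(YesSir)
```

AudioWait() is used here in place of Audio() so that the sequence doesn't end until the voiceover finishes; Audio() also works if you don't need the sequence to wait.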

If your project has many lines of dialogue, you can simplify the management of lines by using 'entrytag' as described in the section Using entrytag To Simplify Sequences.
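A Default Sequence built on entrytags might look like the sketch below. It assumes that each line's audio clip and lip sync animation are both named after that line's entrytag, and that the audio clips sit at the top level of a Resources folder; adapt the names to your own conventions. For legacy facial animation:

```
Voice(entrytag, entrytag)
```

For a Mecanim head layer with one state per line, named after the entrytag:

```
AnimatorPlay(entrytag); AudioWait(entrytag)
```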

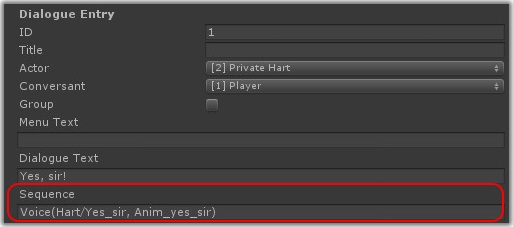

The following example describes how to make an NPC named Private Hart say, "Yes, sir!" with lip sync using the Voice() sequencer command.

In your project, create one or more Resources folders. Put your audio clip somewhere in a Resources folder:

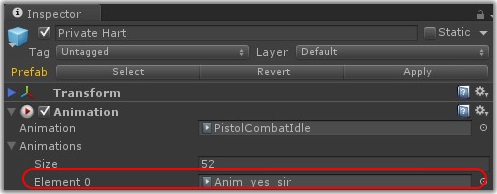

Add the lip sync animation clip to your character's legacy Animation component. Note that a character can have a legacy Animation component and a Mecanim Animator component as long as they control separate bones. In the Zombie Demo (http://www.pixelcrushers.com/dialogue-system-zombie-demo/), the characters' bodies are controlled by Mecanim, and their faces are controlled by legacy animation.

In the Sequence field of the dialogue entry where Private Hart says "Yes, sir!", add the Voice() command. The first parameter is the audio clip. If you've organized your audio clips into subfolders (for example, a subfolder for each character), make sure to include the subfolder path. The second parameter is the animation clip, which must be in the list of animations in your character's Animation component.

When the conversation reaches this dialogue entry, it runs the Voice() command in the Sequence field, which plays the audio clip and the animation and waits until both are done.
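For example, suppose the audio clip is saved as `Resources/Hart/YesSir.wav` and the lip sync clip in the Animation component is named `YesSir_Lips`. Both are hypothetical names for illustration. The Sequence field would then contain the line below. This assumes the clip is loaded through Unity's `Resources.Load()`, which expects a path relative to the Resources folder and without the file extension:

```
Voice(Hart/YesSir, YesSir_Lips)
```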
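If you want the character's face to return to an idle pose afterward, Voice() also accepts an optional third parameter naming a final animation to play when it's done. Check the Voice() entry in the sequencer command reference to confirm the parameter list for your version. In this sketch, `Hart/YesSir` is a hypothetical audio path, `YesSir_Lips` a hypothetical lip sync clip, and `Idle_Face` a hypothetical idle clip; both animation clips must be in the character's Animation component:

```
Voice(Hart/YesSir, YesSir_Lips, Idle_Face)
```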
